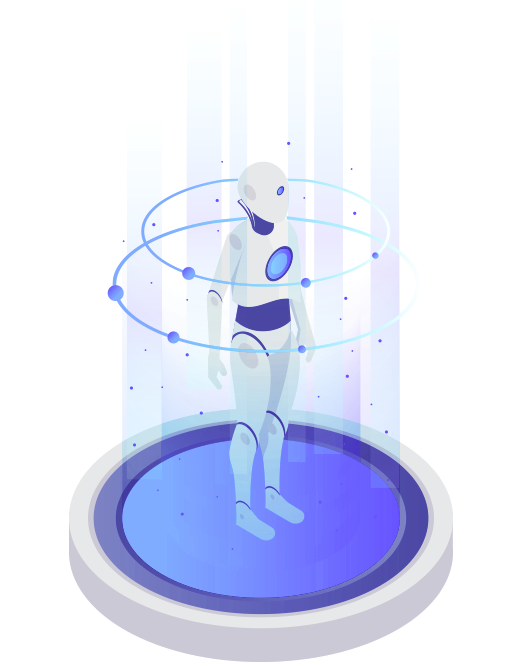

Product Introduction

Built around human-like characteristics, this multi-modal virtual AI technology captures real human facial expressions and movements, synchronizes synthesized speech with matching facial expressions and movements, and combines speech recognition, semantic understanding, and voice synthesis to deliver intelligent voice interaction. By collecting and mining big data, a data exchange standard and system have been developed to organize, summarize, and classify existing open resources and industry-related data. Drawing on both intranet and internet data, the system holds intelligent conversations using technologies such as Automatic Speech Recognition (ASR), Natural Language Processing (NLP), Text to Speech (TTS), and Deep Dynamic Neural Network (DDNN) simulation computation.

Product Advantages

- Motion capture of real human facial expressions and movements
- Voice synthesis synchronized with matching facial expressions and movements
- Speech recognition, natural language understanding, and voice synthesis working together to deliver intelligent voice interaction

Function Description

- User intent recognition and intelligent response
- Rich library of body movements
- Integration of 3D character body movements
- Full-mode voice interaction
- Customization of the virtual character's voice
- Face recognition and automatic wake-up
- Identity recognition and business guidance
- Speech recognition and intelligent interaction

Application Scenarios

- Banking | Securities | Finan...
- Education industry
- Television media
- Intelligent automobiles
- Public transportation
- Digital cities | Smart commu...

京ICP备05046823